Uploading Images to AWS S3 and Storing S3 Key in DynamoDB using AWS Lambda - Streamlit Web App

Photo by Pawel Czerwinski on Unsplash

I recently worked on a Streamlit web application project that allows users to upload a photo and a completed form.

Streamlit is an open-source app framework used primarily by the machine learning and data science communities to quickly build and share their projects as web applications. It lets us write the whole app in Python and offers a limited but useful set of features for spinning up a web application quickly.

Since I did not have much experience with front-end development, especially in JavaScript, HTML, or CSS, I opted to use Streamlit.

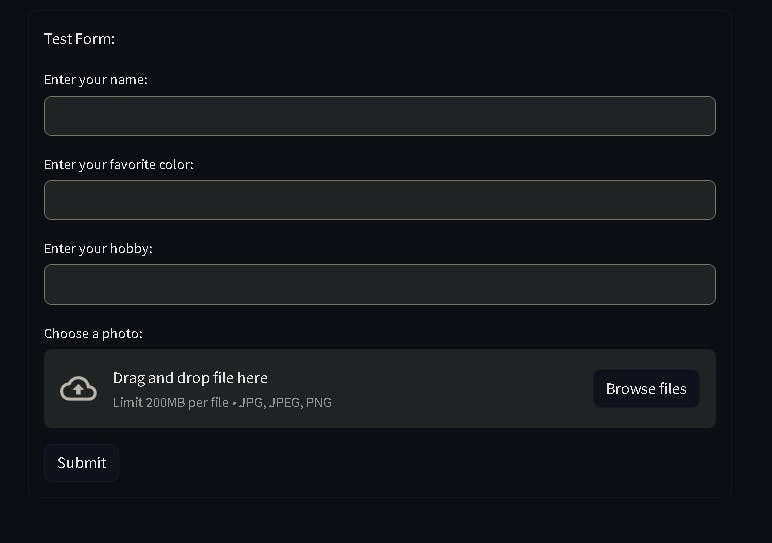

To show how easy it is to get a Streamlit app running, here is sample code that creates a form with file upload functionality:

    import streamlit as st

    with st.form(key="my_form"):
        st.write("Test Form:")
        name = st.text_input("Enter your name: ")
        fav_color = st.text_input("Enter your favorite color: ")
        hobby = st.text_input("Enter your hobby: ")
        photo = st.file_uploader("Choose a photo:", type=['jpg', 'jpeg', 'png'])
        submitted = st.form_submit_button("Submit")

    if submitted:
        st.success("Form submitted.")

Install Streamlit with `pip install streamlit`, then run the application:

    streamlit run app.py

By default, Streamlit serves the app on localhost port 8501 (http://localhost:8501).

The application is rendered in the following manner:

Once the user submits the form, we can perform some basic input validation and send the data as an HTTP POST request to the backend.
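
The validation step could look like the following minimal sketch. The function name `validate_submission`, the 5 MB limit, and the specific rules are assumptions for illustration and are not part of the original app.

```python
from typing import List, Optional

# Hypothetical limit. API Gateway and Lambda also cap request payload sizes,
# so keeping uploads small is a reasonable precaution.
MAX_PHOTO_BYTES = 5 * 1024 * 1024


def validate_submission(name: str, photo_bytes: Optional[bytes]) -> List[str]:
    """Return a list of human-readable problems; an empty list means the input is valid."""
    errors = []
    if not name.strip():
        errors.append("Name is required.")
    if photo_bytes is None:
        errors.append("A photo is required.")
    elif len(photo_bytes) > MAX_PHOTO_BYTES:
        errors.append("The photo is larger than 5 MB.")
    return errors
```

In the Streamlit app, this could be called inside `if submitted:` with `photo.getvalue() if photo else None`, showing each message with `st.error` and sending the request only when the list is empty.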

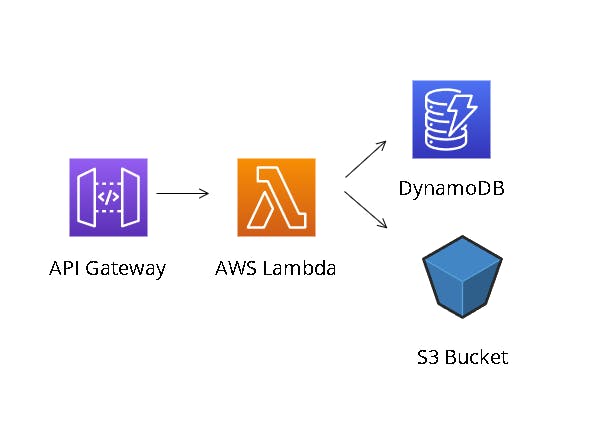

The text attributes of the form are stored in a DynamoDB table and the photo is stored in an S3 bucket.

To associate the user's stored data with the corresponding image in the S3 bucket, we create a unique "S3 key" for each image and store it in the DynamoDB table.

The backend in this project consists of an API Gateway HTTP API integrated with an AWS Lambda function (also written in Python). The Lambda function extracts the user attributes and the image, creates the S3 key, stores the text values in the DynamoDB table, and uploads the image to the S3 bucket.

Image built using: online.visual-paradigm.com

The code in Streamlit to send the photo to the HTTP API is as follows:

    import base64
    import json

    import requests

    # Include the code to display the form here.
    # This snippet assumes the form also collects `email` and that
    # `access_token` is defined elsewhere in the app.

    if submitted:
        encoded_image = base64.b64encode(photo.read()).decode('utf-8')
        photo_upload_api_url = "https://test.execute-api.us-east-2.amazonaws.com/photo_upload"
        photo_payload = {
            "name": name,
            "image": encoded_image,
            "email": email
        }
        photo_headers = {
            'Content-Type': 'application/json',
            'Authorization': access_token  # This is the access token for API Gateway if authorization is enabled.
        }
        try:
            photo_response = requests.post(photo_upload_api_url, data=json.dumps(photo_payload), headers=photo_headers)
            photo_response.raise_for_status()  # Treat 4xx/5xx responses as errors instead of reporting success.
            st.success("ID upload successful. 👍")
        except Exception as err:
            st.error(f'An error occurred: {err}')

The Lambda integration attached to the HTTP API triggers Python code similar to the example below, which creates a new record in the DynamoDB table and uploads the image to the S3 bucket.

Example Lambda function:

    import json
    import boto3
    import base64
    from io import BytesIO


    def lambda_handler(event, context):
        http_method = event['requestContext']['http']['method']
        # If the request is a POST, then we know that the user has submitted the form.
        if http_method == 'POST':
            photo_response = handle_dynamo_db(event)
            return {
                'statusCode': 200,
                'body': json.dumps(photo_response)
            }
        else:
            return {'statusCode': 200}


    def handle_dynamo_db(event):
        """
        This function uploads the image to S3 and inserts the user's name, email, and S3 key into DynamoDB.
        """
        body = json.loads(event['body'])
        dynamodb = boto3.client('dynamodb')  # This is the DynamoDB client.
        image = body['image']
        name = body['name']
        email = body['email']
        s3_key = f"{name}-{email}-id-key.jpg"  # This is the S3 key for the image.
        upload_to_s3(image, s3_key)  # Upload the image to S3.
        update_response = dynamodb.put_item(
            TableName="test-table",
            # put_item expects the full record under Item (Key is used by get_item and update_item).
            Item={
                'name': {'S': name},
                'email': {'S': email},
                's3_key': {'S': s3_key}
            }
        )
        return update_response


    def upload_to_s3(base64_image, s3_key):
        """
        This function uploads the image to S3.
        """
        s3 = boto3.client('s3')  # This instantiates the S3 client.
        bucket_name = 'test-bucket'
        image_bytes = base64.b64decode(base64_image)
        # Create a file-like object from the bytes
        image_file = BytesIO(image_bytes)
        # Upload the image to S3
        s3.upload_fileobj(image_file, bucket_name, s3_key)
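
To exercise the handler without going through API Gateway, one option is to build an event by hand. The sketch below assumes the HTTP API uses payload format version 2.0 (which matches the `event['requestContext']['http']['method']` lookup in the handler) and includes only the fields the handler reads. `build_test_event` is a hypothetical helper, not part of the original project.

```python
import base64
import json


def build_test_event(name: str, email: str, image_bytes: bytes, method: str = "POST") -> dict:
    """Build a minimal HTTP API (payload v2.0 style) event for local testing."""
    body = {
        "name": name,
        "email": email,
        # The Streamlit app sends the image as a base64 string, so do the same here.
        "image": base64.b64encode(image_bytes).decode("utf-8"),
    }
    return {
        "requestContext": {"http": {"method": method}},
        "body": json.dumps(body),
    }


event = build_test_event("Ada", "ada@example.com", b"not-a-real-image")
decoded = json.loads(event["body"])
print(decoded["name"], len(base64.b64decode(decoded["image"])))
```

Passing this event to `lambda_handler` still calls AWS, so it is best run with credentials for a test account or with the boto3 clients stubbed out.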

Some notes for implementation:

Ensure that the Lambda function's execution role has the necessary permissions: dynamodb:PutItem on the DynamoDB table and s3:PutObject on your S3 bucket.
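
In IAM terms, the two permissions are the `dynamodb:PutItem` and `s3:PutObject` actions. A minimal policy for the execution role might look like the sketch below, built as a Python dictionary so it can be printed as JSON. The table and bucket names come from the example code and the region from the example API URL; the account ID is a placeholder.

```python
import json

# Placeholders: replace the account ID and region with your own values.
ACCOUNT_ID = "123456789012"
REGION = "us-east-2"

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "dynamodb:PutItem",
            "Resource": f"arn:aws:dynamodb:{REGION}:{ACCOUNT_ID}:table/test-table",
        },
        {
            "Effect": "Allow",
            "Action": "s3:PutObject",
            # Object-level permissions apply to keys inside the bucket, hence the /*.
            "Resource": "arn:aws:s3:::test-bucket/*",
        },
    ],
}

print(json.dumps(policy, indent=2))
```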

If there are errors in the Lambda execution, you will see HTTP 500 responses returned to the Streamlit app.

Use AWS CloudWatch Logs to monitor your Lambda function's execution and check for any error messages.
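
One way to make failures easier to diagnose is to catch exceptions in the handler, log them (Lambda forwards log output to CloudWatch Logs), and return a structured error body. The sketch below is one possible arrangement rather than the original project's code: `with_error_handling` and `parse_only` are hypothetical names, and the real work is passed in as a function so the example runs without AWS access.

```python
import json
import logging

logger = logging.getLogger(__name__)


def with_error_handling(process):
    """Wrap a function that processes the event so failures become JSON error responses."""
    def handler(event, context):
        try:
            result = process(event)
            return {"statusCode": 200, "body": json.dumps(result)}
        except (KeyError, ValueError) as err:
            # Missing fields or malformed JSON are client errors, not server errors.
            # json.JSONDecodeError is a subclass of ValueError.
            logger.warning("Bad request: %r", err)
            return {"statusCode": 400, "body": json.dumps({"error": "Invalid request body."})}
        except Exception:
            # logger.exception records the full traceback for later inspection.
            logger.exception("Unhandled error while processing the upload")
            return {"statusCode": 500, "body": json.dumps({"error": "Internal server error."})}
    return handler


def parse_only(event):
    """Stand-in for the real work: parse the body and echo the name back."""
    body = json.loads(event["body"])
    return {"name": body["name"]}


lambda_handler = with_error_handling(parse_only)
```

With this shape, the Streamlit app can tell a malformed submission (400) from a backend failure (500), and the traceback for the latter is available in the function's log group.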

Check the DynamoDB table to ensure that the user data and the S3 key are stored as expected.

The S3 key is the file name of the image stored in the S3 bucket (hence we add the .jpg suffix to the key name).
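
Because the uploader also accepts PNG files, a fixed .jpg suffix can mislabel some images. A possible variation, sketched below, derives the suffix from the uploaded file's name and adds a random component so that repeat uploads by the same user do not overwrite each other. `build_s3_key` is a hypothetical helper, not part of the original code, and it assumes the Streamlit app also sends the original file name (for example `photo.name`) in the payload.

```python
import os
import uuid

ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png"}  # mirrors the file_uploader's type list


def build_s3_key(name: str, email: str, filename: str) -> str:
    """Build an S3 key whose suffix matches the uploaded file's extension."""
    extension = os.path.splitext(filename)[1].lower()
    if extension not in ALLOWED_EXTENSIONS:
        raise ValueError(f"Unsupported file type: {extension or 'none'}")
    # uuid4 makes collisions between uploads extremely unlikely.
    unique_part = uuid.uuid4().hex
    return f"{name}-{email}-{unique_part}{extension}"


print(build_s3_key("Ada", "ada@example.com", "portrait.PNG"))
```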